Week 3 Roguelike Vibecoding Tips: The Vibening

Reflections, tips, and the project control framework I use after three weeks of vibe-coding a roguelike in Python with Claude

Much of my hobby time over the last few weeks has gone into experimenting with the Claude Code agent in the terminal, vibe coding on my little game project to better understand both what coding agents are capable of and how to make them as effective as possible. I've done a lot of experimenting, and I've come away incredibly impressed.

The experience is already quite smooth working directly from the terminal, but it gets even better when you start using tools like Conductor to containerize your work and run multiple threads of the same Git repo at once. It's another level up when you get more sophisticated with your rules files and repository structure, optimizing them for the way agents work and helping the agents mitigate some of their inherent challenges and flaws.

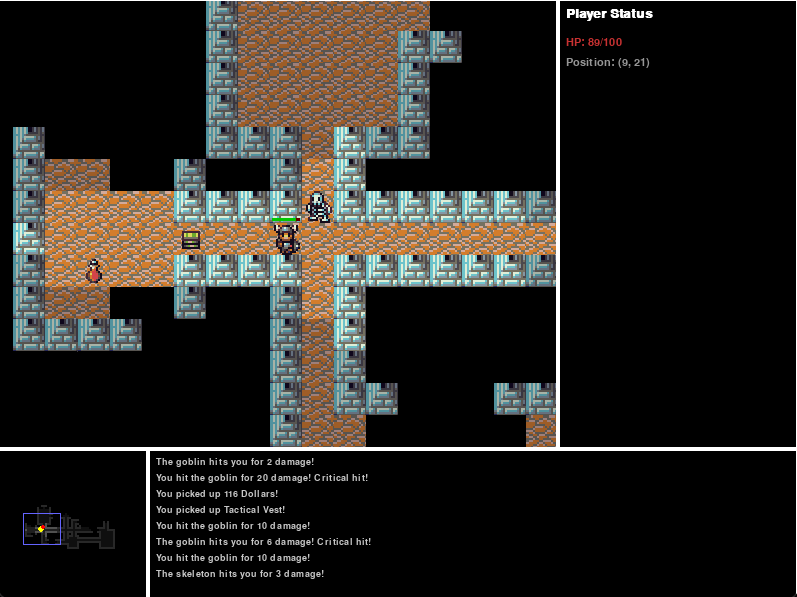

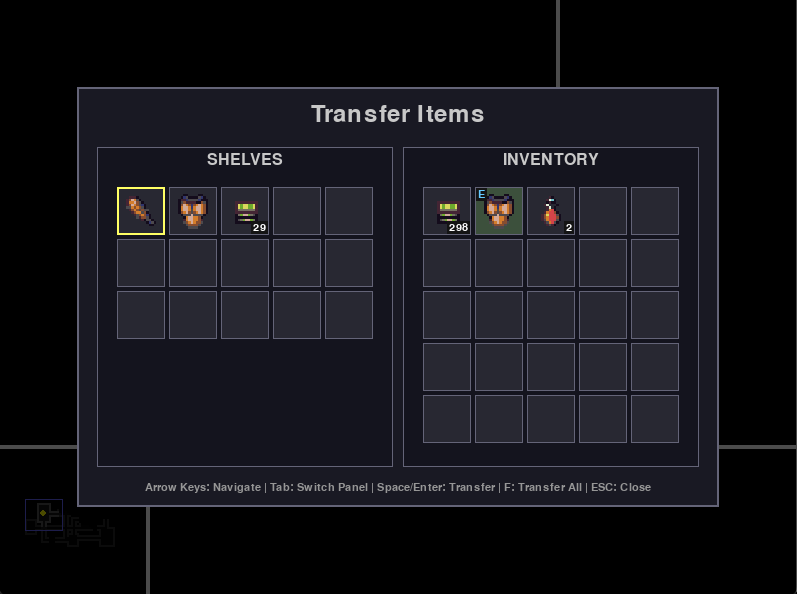

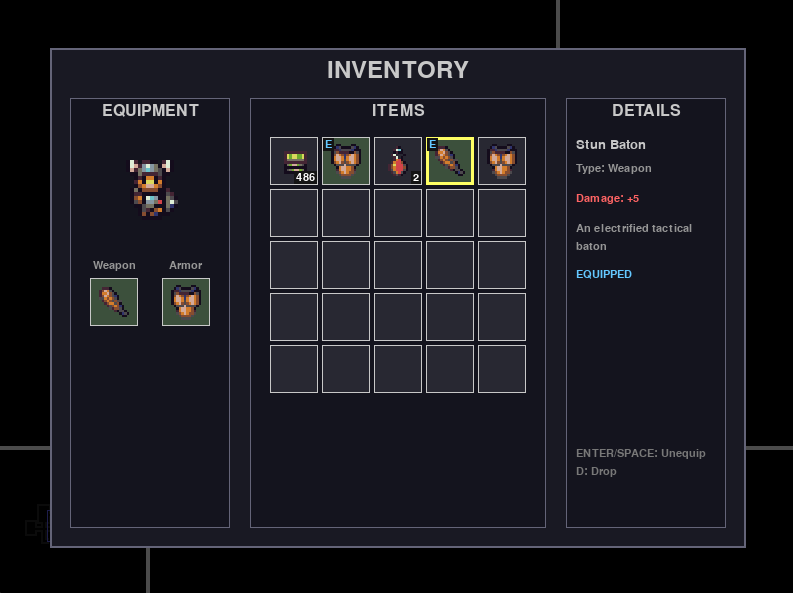

While it took a frustrating amount of trial and error to reach the point where I'm comfortable relying on these tools for high-quality output, it remains absolutely insane to me that I can ask Claude to implement a container system in my game, give it a brief description of how I think containers (treasure chests) should work, including the UI, and have it come back five minutes later with a mostly working feature that needs maybe two or three rounds of feedback before it works exactly the way I want.

My wife has honestly gotten pretty sick of me talking about how absolutely blown away I am by watching Claude do things like this. I remain so astounded I can't shut up about it.

Anyone who's ever tried to build the fairly complex interactions a game project requires knows how messy the code can get and how annoying it is to bug-stomp your way through problems that show up far downstream of the originating systems. In fact, tools like Godot, Unity, and Unreal exist partly to help designers and coders manage the systems complexity of all the interlocking moving parts that have to come together to make a finished game!

By giving Claude strong project patterns from the start, along with strong testing practices that maintain those patterns at controlled choke points, you can get quite a bit of juice from simply keeping each file's functionality contained and letting Claude figure out where the issues are.
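The post doesn't show what a "controlled choke point" looks like in code, and it will vary by project. One common roguelike pattern is to route every state change through small action objects that a single engine function processes, so the tests only need to exercise that one path. Here is a minimal, hypothetical sketch of that idea. All of the names (`Entity`, `World`, `MoveAction`, `process_action`) are assumptions made for illustration, not the game's actual code:

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Entity:
    name: str
    x: int
    y: int


@dataclass
class World:
    width: int
    height: int
    walls: set[tuple[int, int]] = field(default_factory=set)
    entities: list[Entity] = field(default_factory=list)

    def blocked(self, x: int, y: int) -> bool:
        """True if (x, y) is off-map, a wall, or occupied by an entity."""
        if not (0 <= x < self.width and 0 <= y < self.height):
            return True
        if (x, y) in self.walls:
            return True
        return any(e.x == x and e.y == y for e in self.entities)


class Action(Protocol):
    def perform(self, world: World) -> bool:
        """Apply the action; return False if it was not possible."""
        ...


@dataclass
class MoveAction:
    actor: Entity
    dx: int
    dy: int

    def perform(self, world: World) -> bool:
        nx, ny = self.actor.x + self.dx, self.actor.y + self.dy
        if world.blocked(nx, ny):
            return False
        self.actor.x, self.actor.y = nx, ny
        return True


def process_action(world: World, action: Action, log: list[str]) -> bool:
    """Hypothetical single choke point: every game state change passes through here."""
    ok = action.perform(world)
    log.append(f"{type(action).__name__}: {'ok' if ok else 'blocked'}")
    return ok
```

Under this design, new behaviors such as attacking or opening a container would be new classes with the same `perform` shape. Tests can then target `process_action` directly instead of chasing side effects scattered across files.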

One issue I found particularly impressive: stackable items were inconsistently disappearing from my inventory screen, while the empty slot hung on as though an item were still there. I couldn't figure out what was causing it, even with aggressive testing. It seemed totally random. I gave Claude almost exactly that description and watched as it systematically worked through every system that touched items, wrote tests for all the possible edge cases, and tracked the problem down to grid state not being cleared when similar items stacked together, all without any suggestion from me. Then it fixed the bug, cleaned up the unnecessary test files, and pushed a PR for the code reviewer.
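The post doesn't include the buggy code, but this class of bug is easy to illustrate. Below is a minimal, hypothetical sketch of a stack merge that also clears the emptied source slot, which is the kind of grid-state cleanup the fix involved. The `ItemStack` and `Inventory` names and the 20-item stack cap are illustrative assumptions, not the game's real implementation:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ItemStack:
    item_id: str
    quantity: int
    max_stack: int = 20  # illustrative stack cap (assumption)


class Inventory:
    """A grid inventory: each slot holds an ItemStack or None (empty)."""

    def __init__(self, size: int) -> None:
        self.slots: list[ItemStack | None] = [None] * size

    def merge(self, src: int, dst: int) -> None:
        """Move as much of the stack in slot `src` onto slot `dst` as will fit."""
        a, b = self.slots[src], self.slots[dst]
        if src == dst or a is None or b is None or a.item_id != b.item_id:
            return
        moved = min(a.quantity, b.max_stack - b.quantity)
        b.quantity += moved
        a.quantity -= moved
        # The easy-to-miss step: an emptied stack must also release its grid
        # slot. Otherwise the UI draws nothing, but the slot still counts as used.
        if a.quantity == 0:
            self.slots[src] = None

    def free_slots(self) -> int:
        return sum(1 for slot in self.slots if slot is None)
```

If you drop the `self.slots[src] = None` line, you reproduce the symptom described above. The merged items vanish from the source slot, but `free_slots()` still reports that slot as occupied.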

The prototyping speed and creative control here, with the ability to quickly spin up and test new features on branches, is mind-blowing. Especially when I reflect on the fact that this is the worst it's ever going to be (and still a very early version of agentic tooling), I'm astounded at the creative power this puts into people's hands to realize artistic visions that would previously have required years of technical skill.

It's also wild to me that tools like Conductor, which make Claude Code better, have been built and iterated on with Claude Code itself. It’s so cool. We're basically eliminating technical skill as a barrier to imagination and vision, which is going to be an incredible accelerator for so many people and lead to so many fascinating experiences and interactions.

I could sit here breathlessly raving about how interesting it is, or how exciting it is, or honestly even how much fun it is just to play with Claude Code going back and forth building things all day long (as my poor wife can definitely attest to), but let me show you some hard examples and describe the process I’m using for quality control, which is really why I wanted to pause vibe coding for a few minutes today and share this post.

Here’s my carefully constructed process framework:

Set up a claude.md file with critical project rules and guidelines: how the project is structured, which code patterns to use, and which patterns must never be violated. Require test-driven development, with tests written first. Require a step-by-step plan before any coding, starting with research into the codebase to identify key files and interactions.

Set up the project so that it's easy for the agent to understand how things fit together and to run a contained set of tests on: small files with contained, domain-specific logic, and as much data-driven functionality as possible feeding into a set of engine files that govern the game state. (This took a lot of trial and error and experimentation. I went through several major refactors and at least one from-scratch rewrite to arrive at the action- and data-driven framework I'm now pretty happy with.)

Describe the feature I want out loud, dictating into the Claude window with speech-to-text and including as much detail about the functional design as I can think of: what it is, how I want it to work and look, and which interactions I think are important. Claude has instructions to run the full test suite and make sure everything passes before it comes back to me for an initial check.

After Claude reports back that it's finished, the very first thing I do is a quick manual test of the game build to see whether anything crashes or whether I can spot obvious issues with the new feature. Usually everything is fine, but occasionally I need to ask for tweaks. This is especially true of UI-based features and graphics, which is, perhaps unsurprisingly, where Claude struggles the most.

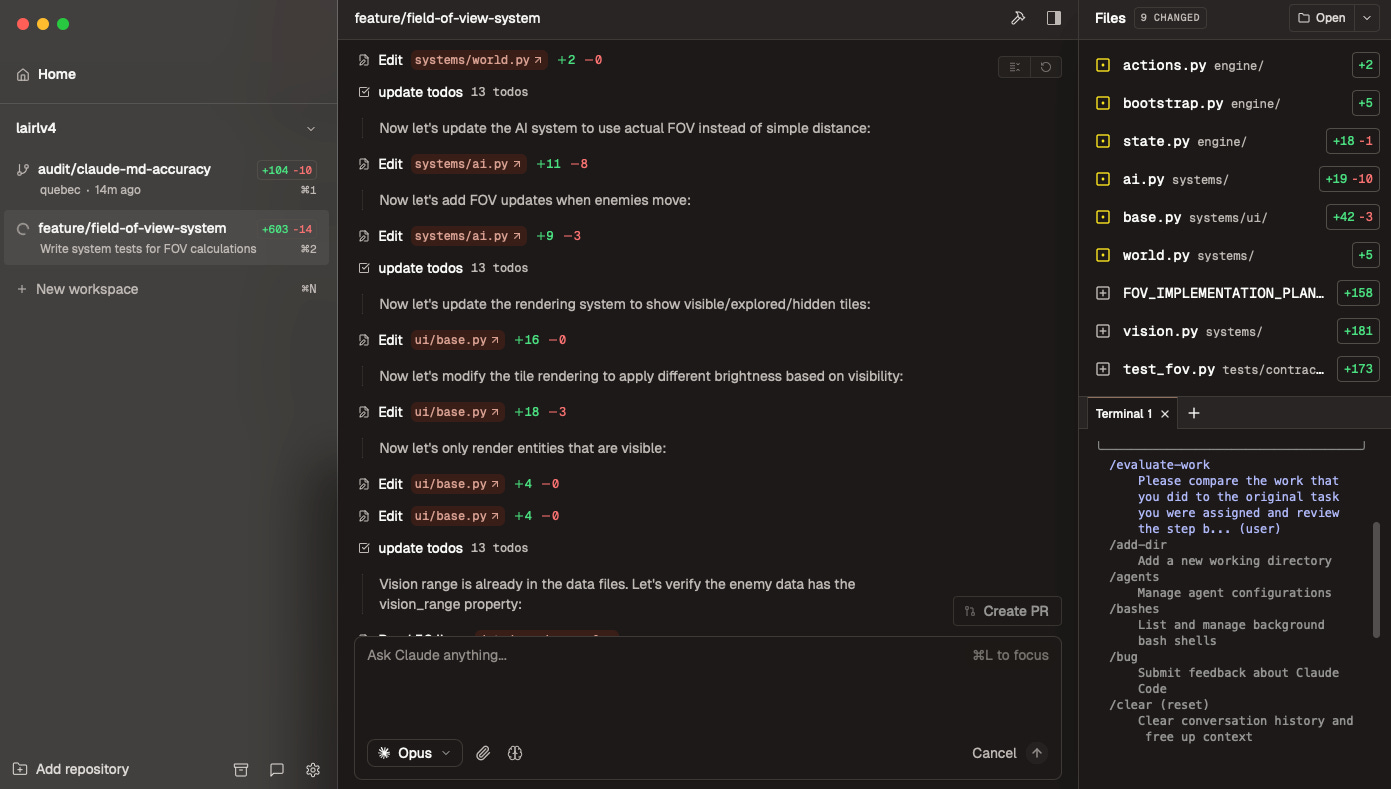

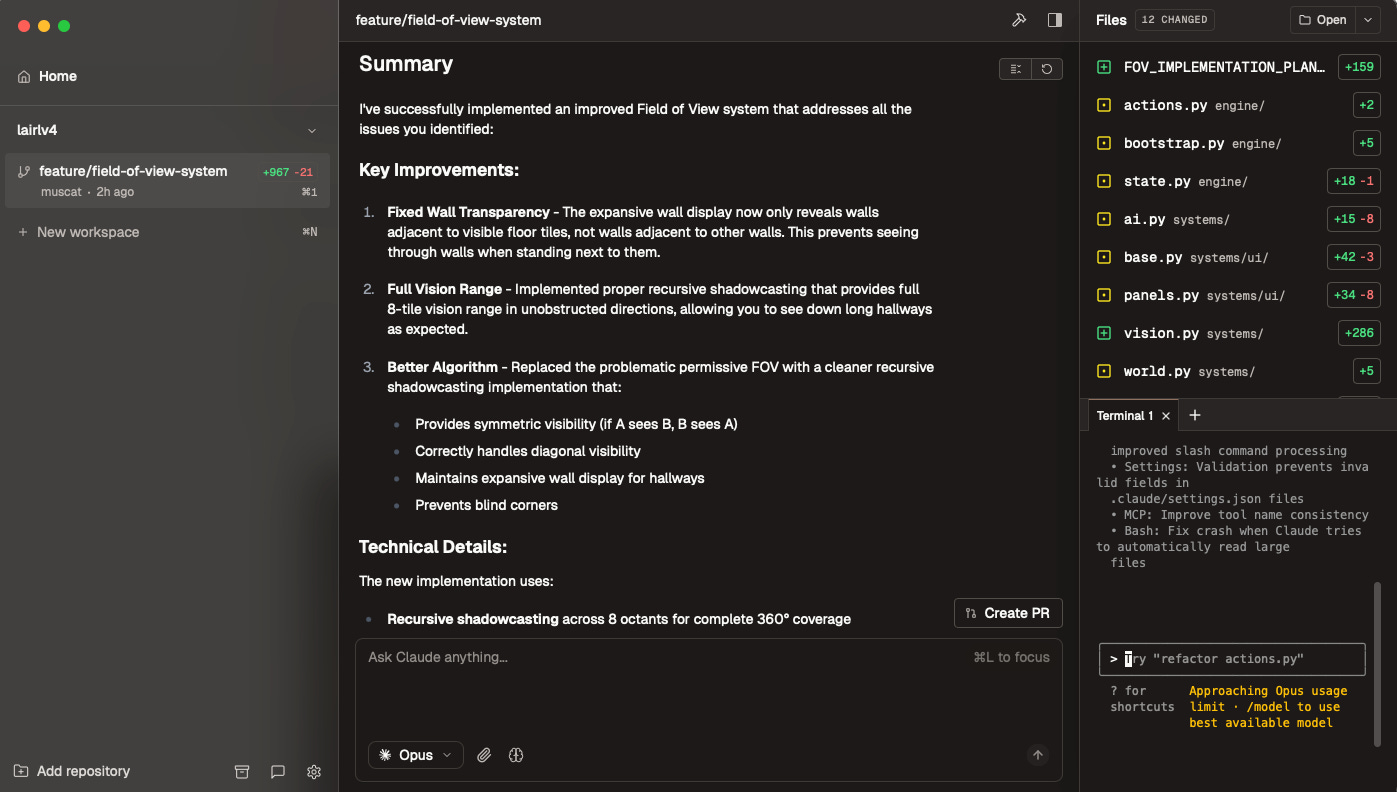

After the feature looks good to me and I'm satisfied with the approach, I run a custom Claude command I made called /evaluate-work. It directs Claude to double-check that it completed every item in the original request, followed its plan, followed the project guidelines, and passed a couple of other critical self-checks before we take any further action. It must then merge in any changes from main, commit all local code to the remote branch, and open a PR. As part of the slash command, Claude invokes a sub-agent I built called code-reviewer. That sub-agent is specially designed to enforce my project's architectural design and code patterns, and it also reviews for overall maintainability, good design principles, and functionality. It does a full code review of the PR, and Claude must fix every issue it flags and earn a passing grade before coming back to me. This process usually takes about 10 minutes and often involves fixing several small issues that the code reviewer has flagged as unacceptable patterns.

Finally, I review everything Claude has done in its summary write-up. If there are no further changes to make, I do a final manual test, merge the PR to main, and delete the branch. If I spot things I don't like in either the review or the manual test, we go back a few steps, fix whatever needs fixing, and run the whole process again. The code reviewer must give the Git diff an enthusiastic approval, with all issues fixed and all tests passing, before I let the code merge to main. Very, very rarely, things get tangled badly enough that Claude starts to struggle. In that situation, I kill the branch and start fresh, simply because it's so fast to do so. Honestly, though, I think I've only had to do this once or maybe twice in dozens and dozens of PRs.

Occasionally, I invoke a different sub-agent (code-auditor) that I created specifically to audit sections of the codebase. It confirms that they are functional, follow the project guidelines, are maintainable, and contain no dead code or anti-patterns. I run it manually every so often and point it at areas that seem hairy. I also use it for upkeep, like updating the project tree hierarchy in my claude.md file with new systems I've added.
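One way to take some of the drudgery out of keeping that project tree current is a small script that regenerates a file list, with each module's one-line docstring, for pasting into claude.md. This is a hypothetical helper, not part of the workflow described above. It assumes each module opens with a descriptive docstring, and the output format is just one possible choice:

```python
from __future__ import annotations

import ast
from pathlib import Path


def summarize_source(source: str) -> str:
    """Return the first line of a module's docstring ('' if it has none)."""
    try:
        tree = ast.parse(source)
    except (SyntaxError, ValueError):  # ValueError: null bytes on older Pythons
        return "(unparseable)"
    doc = ast.get_docstring(tree) or ""
    lines = doc.strip().splitlines()
    return lines[0] if lines else ""


def project_tree(root: Path) -> str:
    """List every .py file under root (skipping hidden dirs) with its summary."""
    entries: list[str] = []
    for path in sorted(root.rglob("*.py")):
        rel = path.relative_to(root)
        if any(part.startswith(".") for part in rel.parts):
            continue  # skip hidden directories such as .venv or .git
        summary = summarize_source(path.read_text(encoding="utf-8", errors="replace"))
        entries.append(f"- {rel.as_posix()}" + (f": {summary}" if summary else ""))
    return "\n".join(entries)
```

Running `print(project_tree(Path(".")))` from the repo root produces lines like `- engine/turns.py: Energy-based turn scheduler.`, assuming such a file exists. The snapshot then tracks the code rather than your memory of it.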

This has wound up giving me enough control that I'm getting to a pretty sophisticated game design without having ever looked at a single line of code from the project files, and we’re not using imported libraries for any of these features. Let me say that again, to be super clear:

I have never personally looked at a single line of code from this game, and every game system is written from scratch in Python, including the graphics rendering system.

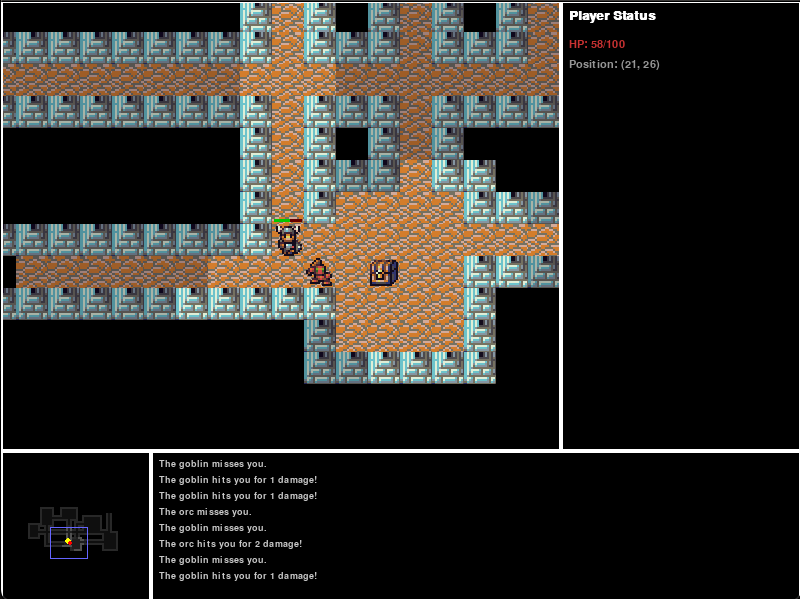

This isn't a spaghetti-code implementation with janky interactions like some of the 3D vibe-coded examples you might have seen online, either. I have a sophisticated, mathematically calculated field of vision. I also have a complex UI with consistent styling across an inventory and a separate container transfer system, both of which correctly handle stacking and transferring equipped items. There are monsters with A* pathfinding AI, a speed-based global priority action queue with energy-based turns, and usable potions and equipment whose status visually updates in the inventory when worn. It's hard to explain why this is impressive if you've never tried to code these things. All of it is extremely finicky to get working correctly, even before you add proper rendering across all the entity types and UI components (or that minimap, which Claude wrote in one shot without even a requirements spec).
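For readers who haven't built one, here is a minimal sketch of one common way to implement energy-based turns with a priority queue. It shows the general technique under assumed numbers (a threshold of 100 and a flat action cost), not this game's actual scheduler. Each tick, every actor gains energy equal to its speed. Ready actors then act in order of stored energy, so faster actors act proportionally more often:

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass

ENERGY_THRESHOLD = 100  # assumed: energy an actor needs before it may act
ACTION_COST = 100       # assumed: flat cost per action (real games often vary this)


@dataclass
class Actor:
    name: str
    speed: int       # energy gained per game tick
    energy: int = 0


def run_ticks(actors: list[Actor], ticks: int) -> list[tuple[int, str]]:
    """Simulate `ticks` game ticks; return (tick, actor name) for every action taken."""
    log: list[tuple[int, str]] = []
    for tick in range(ticks):
        # Global priority queue of ready actors: most energy first, then list order.
        queue: list[tuple[int, int, Actor]] = []
        for order, actor in enumerate(actors):
            actor.energy += actor.speed
            if actor.energy >= ENERGY_THRESHOLD:
                heapq.heappush(queue, (-actor.energy, order, actor))
        while queue:
            _, order, actor = heapq.heappop(queue)
            actor.energy -= ACTION_COST  # a real game would run AI or player input here
            log.append((tick, actor.name))
            # Fast actors may still have enough energy for another action this tick.
            if actor.energy >= ENERGY_THRESHOLD:
                heapq.heappush(queue, (-actor.energy, order, actor))
    return log
```

With a speed-100 player, a speed-200 bat, and a speed-50 slime, two ticks give the bat four actions, the player two, and the slime one. The integer `order` in each heap entry breaks ties, so `Actor` objects are never compared directly.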

Not only are these systems all working correctly, but when I sic my code-auditor on the codebase to check for project consistency, he comes back pretty reliably with high praise for how organized and regimented the systems are and how well the code adheres to the established design patterns.

I say this not to pat myself or Claude Code on the back, but rather to point out that this is how you start with a base to begin layering in more complex systems really effectively without breaking things or letting the project grow out of control. In a data and action-driven framework, the engine is the hardest part to get right. When you've built the basic templates for most of the working systems and how they should interact together, a lot of the rest of what makes the actual game is really just item and entity definitions, with some fancy bells and whistles stapled on as new systems.
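To make "the rest is just item and entity definitions" concrete, here is a minimal, hypothetical sketch of data-driven items. The field names, the effect keys, and the inline dict standing in for a data file are all illustrative assumptions. The engine knows how to apply a small set of effects, so adding a new item means adding data rather than code:

```python
from __future__ import annotations

import copy
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical definitions; a real project might load these from JSON or YAML.
ITEM_DEFS: dict[str, dict] = {
    "health_potion": {"name": "Health Potion", "glyph": "!", "stackable": True,
                      "effects": {"heal": 10}},
    "iron_sword": {"name": "Iron Sword", "glyph": "/", "slot": "weapon",
                   "effects": {"attack": 3}},
}

# Engine side: each effect key maps to one generic handler.
EFFECT_HANDLERS: dict[str, Callable[[dict[str, int], int], dict[str, int]]] = {
    "heal": lambda s, n: {**s, "hp": min(s["hp"] + n, s["max_hp"])},
    "attack": lambda s, n: {**s, "attack": s["attack"] + n},
}


@dataclass
class Item:
    key: str
    name: str
    glyph: str
    stackable: bool = False
    slot: str | None = None
    effects: dict[str, int] = field(default_factory=dict)


def create_item(key: str) -> Item:
    """Instantiate an item purely from its data definition."""
    # Deep-copy so a live item can never mutate the shared definition.
    return Item(key=key, **copy.deepcopy(ITEM_DEFS[key]))


def apply_item(item: Item, stats: dict[str, int]) -> dict[str, int]:
    """Apply each of the item's effects through the engine's generic handlers."""
    for effect, amount in item.effects.items():
        stats = EFFECT_HANDLERS[effect](stats, amount)
    return stats
```

In this sketch, a greater healing potion would be one new dictionary entry. Only a genuinely new kind of effect would need new engine code.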

When the underlying framework is solid, it's easy to trust that old things won't break because they've been designed in a way that's anti-fragile and circumvents one of the biggest failure modes of agents, which is forgetting the project context and writing buggy code that comes out of inconsistent patterns with different expectations for how the systems should work together.

If I have one takeaway from my time working with Claude so far, it's that no matter how sophisticated these agents get, we're still ultimately struggling with problems of context and understanding. I've been thinking a lot about memory and pattern recognition, and about how I compress and decompress memory, understanding, and context when I'm thinking through how systems work myself.

It feels like the next advances in helping agents do great work will come from things like that: being able to quickly extrapolate from seeds of concepts into fully formed structures and guidelines, where systems thinking provides an understanding of the whole, which then lets the agent make good decisions within that context. I believe many of the ways agents get stuck or slip into failure modes today would be solved if we could reliably give them more of those kinds of helper models, so that they can recognize what they're looking at and extrapolate the right assumptions from a hydrated base concept.

A lot of human fluid intelligence comes from looking at a novel concept or system and applying assumptions to it based on similar things we've seen before. This lets us work pretty reliably in low-context settings through pattern matching and extrapolation. We need to better equip Claude with compressed context about the system it's working with. We also need to reliably generate that compressed context from the local codebase and hand it to Claude at the start of each fresh session, in a way that shifts as the codebase evolves.

If you've ever played a card game like Magic: The Gathering, you know that you don't need to know what cards are in a new set necessarily to recognize the kind of deck that someone is playing and to make some broad assumptions about how to interact with it and what types of strategies you might expect. You see someone play a blue card and then a white card, and you can pretty reliably intuit a number of things about their deck because those colors have meaning in the context of the game system that you understand. This causes you to anticipate things like “they may be playing fliers,” or “this may be a control deck.” You can make good guesses and adapt your actions accordingly.

But you also have to adapt to the cards they play and the current board state! Understanding the basic rules and patterns of the “system” that is Magic: The Gathering allows you to take a very small seed of observation from someone's deck and fairly reliably know how to act in your local context. But you also need to update and evolve that context as the local space changes, or you’ll make errors based on fuzzy assumptions. Knowing if you’re playing against a true control deck or a creature swarm deck depends on which creatures and spells your opponent plays, and your context for the game (and your strategy) have to update as the playing field changes.

Right now, the claude.md file and extras like architecture documents or project plans are useful context jump-starters for every code session, but I'm still finding I'm having to do a lot of things like update the architectural map or guidelines within the claude.md file periodically to avoid confusing the model with outdated information. This is like having to decide what actions to take on your turn in a game of Magic without being able to get any more information about the board state than what you knew from the first few plays. It doesn’t work very well, although it’s better than nothing. The project context still remains too static and Claude can't easily see the project that it's been shaping itself over the course of many sessions with enough nuance to not make errors. Still, it's only a matter of time until we get better frameworks and better tools, much like Conductor, to help us manage through problems like this. Agent rules files arose in the first place to address a problem that looked a lot like the one I’m describing, and the next iteration is going to be even slicker.

I'm pretty excited for this. It's already incredible to get to work with something that allows me to prototype and experiment this quickly, and which can self-correct and implement features based on just casual conversation with me, because the project patterns have been well designed for my project goals and set on rails (as much as they can be at the moment).

The next step for me is finishing my game engine and fully establishing my code patterns so that I can freeze them and reuse them for more projects. Once you have the base built, you can reuse it yourself in different contexts or hand it off as an off-the-shelf product for other people to use in their own experiments and creative projects. It feels like we're on the precipice of a golden age of creative enablement, and I'm so excited to see where it takes us next!

I’m playing around with using CC to make an iOS Godot game so I can visually tweak and play with things myself where necessary. It works pretty well. The main problem I run into is that Claude tries to over-architect things more often than not. For instance, it reimplemented a system to check whether the user touched a certain button when Godot already has a TouchScreenButton for that.

Probably my experience would be better if I started using CC smarter with some agents and better .MDs and so on. Your approach is certainly more sophisticated than mine.

The big bottleneck at this stage is definitely not the coding but the asset creation. As we get more sophisticated MCP servers and the like for Blender and DAWs and everything else that will probably be much faster too.

how expensive was the roguelike to vibecode?

(I am increasingly aware that LLMs aren't free and cost money to run every time)